Arkheion and the Dragon, part II

By the end of last week's post/parable we had Library of Congress (LoC) name authority IDs for many of our person and corporation names, but had a lot of uncertainty as to whether these IDs had been matched correctly. The OpenRefine script we were using to query VIAF for LoC IDs also didn't support searching for any control access types beyond person and corp names.

We weren't quite satisfied with this, so after looking into some of our options, we decided to try a new approach: we would move from OpenRefine to Python for handling VIAF API queries and data processing, add a bit of web scraping, then use more refined fuzzy-string matching to remove false positives from the API results. By the time we had finished, we had confirmed LoC IDs for over 6500 unique entities (along with ~2000 often hilariously wrong results) and, as an added benefit, were able to update many of our human agent records with new death dates. All told, the process took about a day.

Here's how we did it:

The VIAF API

OCLC offers a number of programmatic access points into VIAF's data, all of which you can see and interactively explore here. Since we're essentially doing a plain-text search across the VIAF database, the "SRU search" API seemed to be what we were looking for. Here is what an SRU search query might look like:

http://viaf.org/viaf/search?query=[search index]+[match type]+[search query]&sortKeys=[what to sort by]&httpAccept=[data format to return]

Or, split into its parts:

http://viaf.org/viaf/search

?query=[search index]+[match type]+[search query]

&sortKeys=[what to sort by]

&httpAccept=[data format to return]

There are a number of other parameters that can be assigned - this document gives a detailed overview of what exactly every field is, and what values each can hold. It's interesting to read, but to save some time here is a condensed version, using only the fields we need for the reconciliation project:

- Search query: how and where to find the requested data. This is itself made up of three parts:

  - Search index: what index to search through. Relevant options for our project are:

    - local.corporateNames: corporation names

    - local.geographicNames: geographic locations

    - local.personalNames: names of people

    - local.sources: which authority source to search through. "lc" for Library of Congress.

  - Match type: how to match the items in the search query to the indicated search index -- e.g. exact ("="), any of the terms in the query ("any"), all of the terms ("all"), etc.

  - Search query: the text to search for, in quotes

- Sort keys: what to sort the results by. At the moment, VIAF can only sort by holdings count ("holdingscount").

- httpAccept: what data format to return the results in. We want the xml version ("application/xml")

Putting it all together, if we wanted to search for someone, say, Jane Austen, we would use the following API call:

http://viaf.org/viaf/search

?query=local.personalNames+all+"Jane Austen"+and+local.sources+=+lc

&sortKeys=holdingscount

&httpAccept=application/xml
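Assembling that URL by hand gets fiddly once a name contains spaces or punctuation. Here is a minimal sketch of building it with Python 3's urllib.parse. The function name build_viaf_url and its default arguments are illustrative, not part of VIAF or of the original script, and the endpoint is simply the one quoted above, which may have changed since.

```python
from urllib.parse import urlencode

VIAF_SEARCH_URL = "http://viaf.org/viaf/search"  # endpoint as quoted in this post


def build_viaf_url(index, text, source="lc", match_type="all"):
    """Assemble an SRU search URL from the parts described above.

    Hypothetical helper for illustration only.
    """
    # Query layout: <index> <match type> "<text>" and local.sources = <source>
    query = '{0} {1} "{2}" and local.sources = {3}'.format(
        index, match_type, text, source)
    params = [
        ("query", query),
        ("sortKeys", "holdingscount"),
        ("httpAccept", "application/xml"),
    ]
    # urlencode turns spaces into "+" and percent-escapes quotes, "=" and "/"
    return VIAF_SEARCH_URL + "?" + urlencode(params)


print(build_viaf_url("local.personalNames", "Jane Austen"))
```

The printed URL is the Jane Austen call with the quotes and equals sign percent-encoded, which is what a browser does behind the scenes anyway.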

The neat thing about web APIs is that you can try them out right in your browser. Check out the Jane Austen results here! It's an xml document with every relevant result, ordered by number of holdings for each entry worldwide, and including full VIAF metadata for every entity. That's a lot of data when all we're looking for is the single line with the first entry's LoC ID. This is where Python comes in.

Workflow:

Before we dive into the code, here is the high-level workflow we ended up settling on:

- Query VIAF with the given term

- If there's a match, grab the LoC auth id

- Use the LoC web address to grab the authoritative version of the entity's name.

- Intelligently compare the original entity string to the returned LC value. If the comparison fails, then we treat the result as a false positive.
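The four steps above can be sketched as a single function. This is only a skeleton under stated assumptions: reconcile and the three callables passed into it are hypothetical stand-ins for the VIAF lookup, the LoC lookup, and the string comparison, and the ID used in the usage lines is a placeholder rather than a real LoC identifier.

```python
def reconcile(term, search_viaf, fetch_lc_name, names_match):
    """Run one term through the four workflow steps.

    Returns (lc_id, lc_name) for a vetted match, or None otherwise.
    All three callables are hypothetical stand-ins for the real steps.
    """
    lc_id = search_viaf(term)            # steps 1-2: query VIAF, take the LoC ID
    if lc_id is None:
        return None                      # VIAF had no match at all
    lc_name = fetch_lc_name(lc_id)       # step 3: authoritative name from LoC
    if not names_match(term, lc_name):   # step 4: reject false positives
        return None
    return lc_id, lc_name


# Usage with in-memory fakes instead of real web requests
fake_ids = {"Austen, Jane": "n00000001"}              # placeholder ID
fake_names = {"n00000001": "Austen, Jane, 1775-1817"}
starts_with = lambda ours, theirs: theirs.startswith(ours)

print(reconcile("Austen, Jane", fake_ids.get, fake_names.get, starts_with))
print(reconcile("Wonder, Stevie", fake_ids.get, fake_names.get, starts_with))
```

Passing the steps in as functions keeps the control flow testable without touching the network.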

Let's dig in!

VIAF, LC, and Python

First, we wrote an interface to the VIAF API in python, using the built-in urllib2 library to make the web requests and lxml to parse the returned xml metadata. That code looked something like this:

You can see above that the search function takes three values: the name of the VIAF index to search in (which matches to one of our persname, corpname, or geogname tags), the text to search for, and the authority to search within (here LC, but it could be any that VIAF supports).
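A function with those three parameters could be sketched roughly as follows. This is not the original code: it targets Python 3, so urllib.request stands in for urllib2, and the fetch argument is a hypothetical hook added so the function can be exercised without network access. The index names come from the list earlier in the post.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# EAD controlaccess tag -> VIAF search index
VIAF_INDEXES = {
    "persname": "local.personalNames",
    "corpname": "local.corporateNames",
    "geogname": "local.geographicNames",
}


def _http_get(url):
    """Default fetcher: perform a real web request and return the body."""
    with urlopen(url, timeout=30) as response:
        return response.read()


def search_viaf(tag, text, authority="lc", fetch=_http_get):
    """Return the raw XML bytes of a VIAF SRU search.

    `fetch` (url -> bytes) is a hypothetical hook for testing.
    """
    query = '{0} all "{1}" and local.sources = {2}'.format(
        VIAF_INDEXES[tag], text, authority)
    url = "http://viaf.org/viaf/search?" + urlencode({
        "query": query,
        "sortKeys": "holdingscount",
        "httpAccept": "application/xml",
    })
    return fetch(url)
```

Calling search_viaf("persname", "Jane Austen") would issue the request shown earlier; an unknown tag raises a KeyError rather than silently searching the wrong index.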

With the VIAF search results in hand, our script began searching through the xml metadata for the first, presumably most relevant result. All sorts of interesting stuff can be found in that data, but for our immediate purposes we were only interested in the Library of Congress ID:
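As a rough sketch of that extraction step, the snippet below walks the response in document order and returns the first Library of Congress identifier it finds. It uses the standard library's ElementTree rather than lxml, and it assumes each record lists its sources as elements named source whose text looks like "LC|n 00000001". The sample document is a simplified, made-up stand-in for a real VIAF response, with placeholder IDs.

```python
import xml.etree.ElementTree as ET


def first_lc_id(xml_text):
    """Return the first LC identifier in a VIAF response, or None."""
    root = ET.fromstring(xml_text)
    for element in root.iter():  # document order, so the first record wins
        local_name = element.tag.rsplit("}", 1)[-1]  # drop "{namespace}" prefix
        value = (element.text or "").strip()
        if local_name == "source" and value.startswith("LC|"):
            # "LC|n 00000001" -> "n00000001"
            return value.split("|", 1)[1].replace(" ", "")
    return None


# Simplified, hypothetical response shape with placeholder identifiers
SAMPLE = """<searchRetrieveResponse>
  <records>
    <record><recordData>
      <VIAFCluster xmlns="http://viaf.org/viaf/terms#">
        <sources>
          <source>DNB|000000000</source>
          <source>LC|n 00000001</source>
        </sources>
      </VIAFCluster>
    </recordData></record>
    <record><recordData>
      <VIAFCluster xmlns="http://viaf.org/viaf/terms#">
        <sources><source>LC|n 00000002</source></sources>
      </VIAFCluster>
    </recordData></record>
  </records>
</searchRetrieveResponse>"""

print(first_lc_id(SAMPLE))  # n00000001
```

Matching on the local tag name sidesteps namespace prefixes, which keeps the sketch independent of exactly how the response declares them.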

Now that we had the LC auth ID, we could query the Library of Congress site to grab the authoritative version of the term's name. Here we used BeautifulSoup, a python module for extracting data from html:
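A hedged sketch of that lookup is below. It swaps BeautifulSoup for the standard library's html.parser so it runs without extra installs, and it assumes the authoritative heading is the text of the first h1 element on the page. That is an assumption about the page layout, not something confirmed here, so check it against a real record before relying on it. The sample page and ID are made up.

```python
from html.parser import HTMLParser

# LoC name authority pages live under this path
LC_NAME_URL = "http://id.loc.gov/authorities/names/{0}.html"


class FirstHeading(HTMLParser):
    """Collect the text of the first <h1> element on a page."""

    def __init__(self):
        super().__init__()
        self.inside = False
        self.done = False
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "h1" and not self.done:
            self.inside = True

    def handle_endtag(self, tag):
        if tag == "h1" and self.inside:
            self.inside = False
            self.done = True  # ignore any later headings

    def handle_data(self, data):
        if self.inside:
            self.parts.append(data)


def heading_from_html(page):
    """Return the first heading with whitespace collapsed, or None."""
    parser = FirstHeading()
    parser.feed(page)
    parser.close()
    return " ".join("".join(parser.parts).split()) or None


page = "<html><body><h1>Austen, Jane,\n 1775-1817</h1><h1>Other</h1></body></html>"
print(LC_NAME_URL.format("n00000001"))  # placeholder ID
print(heading_from_html(page))
```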

Now we had four data points for every term: our original term name, an unvetted LoC ID number and name, and the type of controlaccess term the item belongs to (persname, corpname, or geogname). As before, there were a number of obvious false positives, but there were far too many terms for us to check them individually. As Max hinted at in last week's post, this was fuzzywuzzy's time to shine.

Fuzzy Wuzzy was (not) a bear

(also not a Rudyard Kipling poem)

Max gave an overview of fuzzywuzzy, but just as a refresher: it's a python module with a variety of methods for comparing similar strings under different lenses, all of which return a "similarity score", out of 100. Here is what a very basic comparison would look like:
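With fuzzywuzzy installed, the basic check is a call to fuzz.ratio(a, b) after "from fuzzywuzzy import fuzz". To keep the example free of third-party installs, the sketch below uses the standard library's difflib as a stand-in. It produces the same kind of 0 to 100 score, but exact numbers may differ from what fuzzywuzzy reports.

```python
from difflib import SequenceMatcher


def ratio(a, b):
    """Similarity of two strings as an integer from 0 to 100.

    Stand-in for fuzzywuzzy's fuzz.ratio, built on difflib.
    """
    return int(round(100 * SequenceMatcher(None, a, b).ratio()))


print(ratio("Austen, Jane", "Austen, Jane"))  # identical strings score 100
print(ratio("Austen, Jane", "Jane Austen"))   # same name, reordered: lower score
```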

This is fine, but it's not very sophisticated. One of fuzzywuzzy's alternate comparison methods is much better suited for our purposes:

The token_sort_ratio comparison removes all non-alphanumeric characters (like punctuation), pulls out each individual word, puts them back in alphabetical order, and then runs a normal ratio check. This means that things like word order and esoteric punctuation differences are ignored for the purposes of comparison, which is exactly what we want.
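The steps just described can be sketched in a few lines. Again this is a standard-library approximation rather than fuzzywuzzy itself (whose real call is fuzz.token_sort_ratio(a, b)); lowercasing the input is an assumption added here so that case differences do not count against a match.

```python
import re
from difflib import SequenceMatcher


def token_sort(text):
    """Strip punctuation, split into words, and rejoin them alphabetically."""
    # [\W_]+ matches runs of anything that is not a letter or digit
    words = re.sub(r"[\W_]+", " ", text.lower()).split()  # lowercasing: assumption
    return " ".join(sorted(words))


def token_sort_ratio(a, b):
    """Plain ratio check (0-100) on the token-sorted strings."""
    matcher = SequenceMatcher(None, token_sort(a), token_sort(b))
    return int(round(100 * matcher.ratio()))


print(token_sort("Austen, Jane"))                       # austen jane
print(token_sort_ratio("Austen, Jane", "Jane Austen"))  # 100
```

Both spellings reduce to the same sorted string, so inverted name order no longer hurts the score.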

Now that we had a method for string comparisons, we could start building more sophisticated comparison code - something that returns "True" if the comparison is successful, and "False" if it isn't. We started by writing some tests, using strings that we knew we wanted to match, and strings we knew should fail. You can see our full test suite here.

As a result of our testing, we decided we would need to have unique comparison methods for each type of controlaccess term we were testing - one for geognames, one for persnames, and one for corpnames. Geognames turned out to be easiest - in that case our test criteria were met by a basic token_sort_ratio check - names were deemed correct when they had a fuzz score of 95 or higher. Both persnames and corpnames turned out to need a bit more processing before we had satisfactory results. Here is what we came up with:
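To illustrate the idea of per-type checks, here is a sketch under loud assumptions. Only the geogname rule (token_sort_ratio of 95 or higher) comes from the description above. The persname check is purely hypothetical: it strips digits so that life dates on the LoC heading do not drag the score down, and its threshold is a guess. It is not the processing the original script performed. difflib again stands in for fuzzywuzzy.

```python
import re
from difflib import SequenceMatcher


def token_sort_ratio(a, b):
    """Standard-library stand-in for fuzzywuzzy's token_sort_ratio."""
    def normalise(text):
        return " ".join(sorted(re.sub(r"[\W_]+", " ", text.lower()).split()))
    return int(round(100 * SequenceMatcher(None, normalise(a), normalise(b)).ratio()))


def geogname_matches(ours, lc_name):
    """Rule from the post: a token-sorted score of 95 or higher passes."""
    return token_sort_ratio(ours, lc_name) >= 95


def persname_matches(ours, lc_name, threshold=95):
    """HYPOTHETICAL rule: ignore digits (life dates) before comparing."""
    strip_digits = lambda name: re.sub(r"\d+", " ", name)
    return token_sort_ratio(strip_digits(ours), strip_digits(lc_name)) >= threshold


print(geogname_matches("Wisconsin", "Belgium"))                      # False
print(persname_matches("Austen, Jane", "Austen, Jane, 1775-1817"))   # True
```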

With this script in hand, all we had to do was run our VIAF/LC data through it, remove all results that failed the checks, then use the resulting data to update our finding aids with the vetted LoC authority links (while removing all the links we had added pre-vetting). Turns out, we ended up with > 4200 verified unique persname IDs, ~1500 IDs for corpnames, and 800 geogname IDs, all of which we were able to merge back into our EADs using many of the methods Max described last week. We also output all the failed results, which were sometimes hilarious: apparently VIAF decided that we really meant "Michael Jackson" while searching for "Stevie Wonder". And, no, Wisconsin is not Belgium, nor is Asia Turkey.

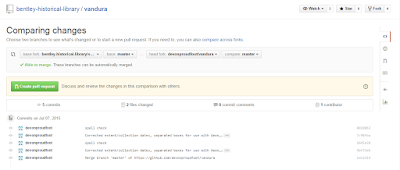

This also gave us a great opportunity to update all of our persnames with death dates if the LoC term had one and we did not. You can check out our final GitHub commit here - we were pretty happy with the results!
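One way such a death-date update could work is sketched below. It assumes both names end in life dates of the form "1900-" or "1900-1980" and only fills in the death year when the birth years agree. The function name, the regular expression, and the sample names are all made up for illustration and are not taken from the original script.

```python
import re

# Trailing life dates: a birth year, a hyphen, and an optional death year
LIFE_DATES = re.compile(r"(\d{4})-(\d{4})?\s*$")


def add_death_date(ours, lc_name):
    """Return `ours` with the death year filled in from `lc_name` if possible."""
    mine = LIFE_DATES.search(ours)
    theirs = LIFE_DATES.search(lc_name)
    if not mine or not theirs:
        return ours  # one side has no dates to compare
    if mine.group(2) or not theirs.group(2):
        return ours  # we already have a death year, or LoC has none
    if mine.group(1) != theirs.group(1):
        return ours  # birth years disagree: leave it for a human
    return ours[:mine.end(1)] + "-" + theirs.group(2)


print(add_death_date("Smith, John, 1900-", "Smith, John, 1900-1980"))
```

Refusing to touch a record when the birth years differ is a deliberately cautious choice, since a mismatch suggests the two headings may not describe the same person.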

Postscript

In retrospect there is a lot we could improve about the process. We played things conservatively, particularly for persnames, so our false-positive checking code itself flagged a number of correct matches as false positives. Our extraction of LoC codes from the VIAF search API could be a lot more sophisticated than just mindlessly grabbing the first result - our fuzzy comparisons did a lot to mitigate that particular problem, but since VIAF sorts its results by number of holdings worldwide rather than by exact match, we were left to the capricious whims of international collection policies. The web-request code is also fairly slow - since we didn't want to inadvertently DDoS any of the sites we were querying (and we'd rather not be the archive that took down the Library of Congress website), we needed to set a delay in between each request. When running checks against >10,000 items, even just a one second delay adds up. Even so, it still runs in an afternoon -- orders of magnitude faster than manual checking.
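A minimal sketch of that kind of polite delay follows. The throttled helper and its sleep parameter are illustrative names, not part of the original script. The arithmetic at the end shows why it adds up: 10,000 requests at one second apiece is close to three hours of waiting before any actual work is counted.

```python
import time


def throttled(items, delay=1.0, sleep=time.sleep):
    """Yield items one at a time, pausing `delay` seconds between them.

    `sleep` is injectable so the pause can be faked in tests.
    """
    for position, item in enumerate(items):
        if position:
            sleep(delay)  # no pause before the very first request
        yield item


# Cost of the delay alone for 10,000 requests at one second each
hours_waiting = 10000 * 1.0 / 3600
print(round(hours_waiting, 1))  # 2.8
```

A loop such as "for term in throttled(terms): ..." then spaces out the web requests without any other change to the code.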

We hope you've found this overview interesting! All code in the post is freely available for use and re-use, and we would love to hear if anyone else has tried or is thinking of trying anything similar. Let us know in the comments!