A previous post in this series looked at lessons from "No Silver Bullet: Essence and Accidents of Software Engineering" and how they apply to ArchivesSpace, an open source archives information management application we'll be using as part of our Archivematica-ArchivesSpace-DSpace Workflow Integration project.

Today, I'd like to continue in that vein (i.e., that none of these pieces of software are perfect, and that they don't meet every one of our needs, but in the end that's OK) and take a look at what we can learn about Archivematica from a genre of literature and other artistic works that I'm rather fond of: dystopian fiction.

Welcome to Dystopia

A dystopia is an imaginary community or society that is undesirable or frightening; it literally translates to "not-good-place." Dystopian fiction--a type of speculative fiction because it's generally set in a possible future--usually involves the "creation of an utterly horrible or degraded society headed to an irreversible oblivion." [2]

Setting the Scene: Characteristics of Our [Future] Dystopian Society

If there's one thing you can say about those of us who are interested in digital curation and preservation, it's that we're wary of the very real possibility of our future being such a "not-good-place." Here are three (and a half) reasons why:

1. Society is an illusion of a perfect utopian world.

In The Matrix, a 1999 film by the Wachowski brothers, reality (not quite a utopia, but still) as perceived by most humans is actually a simulated reality called "the Matrix," created by sentient machines to subdue the human population. [3]

The first tell that you're living in a dystopia is that things are "pretty perfect," and I'd argue that, for the casual user of digital material, things certainly seem "pretty perfect." For various reasons, including the fact that for the majority of us the complex technology stack needed to render them is "invisible," digital materials appear as if they'll be around forever (for example, I spend a good deal of time looking for things to link to on this blog, knowing all the while that the lifespan of a URL is, on average, 44 days), or as if you can preserve them by just "leaving them on a shelf" like you would a book (bit rot!). However, whether it's due to file format obsolescence or storage medium corruption or insufficient metadata or issues with storage or organizational risks (or...or...or...), in reality digital materials are much more fragile than their physical counterparts. This illusion of permanence has all kinds of implications, not the least of which is that it can be difficult to convince administrators that digital preservation is a real thing worth spending money on.

Whether we subscribe to a full-blown "digital dark age" as asserted by Terry Kuny at the 1997 International Federation of Library Associations and Institutions (IFLA) Council and General Conference (barbarians at the gates and all!), or our views are a bit more hopeful, all of us in the field know that there are many, many threats to digital continuity, and that these threats jeopardize "continued access to digital materials for as long as they are needed." (That's from my favorite definition of digital preservation, by the way.)

2. A figurehead or concept (OAIS, anybody?) is worshiped by the citizens of the society.

In Nineteen Eighty-Four, written in 1948 by George Orwell, "Big Brother" is the quasi-divine Party leader who enjoys an intense cult of personality. [4]

A second clue that you're living in a dystopia is that a figurehead or, in our case, concept, is worshiped by the citizens of the society.

While "worship" may be a bit strong for the relationship that the digital curation community has with the Open Archival Information System (OAIS) Reference Model, you can't deny that it "enjoys an intense cult of personality." It informs everything we do, from systems to audits to philosophies. Mike likes to joke that every presentation on digital preservation has to have an "obligatory" OAIS slide. I like to joke that OAIS is like a "secret handshake" among our kind. Big Brother is watching!

I'm not trying to imply that OAIS's status is a bad thing. However, it does lead us to another, related characteristic of a dystopian society (and this is the half): strict conformity among citizens and the general assumption that dissent and individuality are bad. Don't believe me? Gauge your reaction when I say what I'm about to say:

We don't create Dissemination Information Packages (DIPs).

That's right. We don't. Just Archival Information Packages (AIPs). [Gasp!]

Strictly speaking, we provide online access to our AIPs, so in a way they act as DIPs. We just don't, for example, ingest a JPG, create a TIFF for preservation and then create another JPG for access. Storage is a consideration for us, as is the processing overhead that we would have to undertake if we wanted to do access right (for example, for video, which would need a multiplicity of formats to be streamable regardless of end user browser or device), as is MLibrary's longstanding philosophy that guides our preservation practices: to unite preservation with access.

As it was put to me by a former colleague (now at the National Energy Research Scientific Computing Center, or NERSC):

This has put us to some degree at odds with practices that are (subjectively, too) strictly based on OAIS concepts, where AIPs and DIPs are greatly variant, DIPs are delivered, and AIPs are kept dark and never touched.

Our custom systems - both DLXS and HathiTrust - deliver derivatives that are created on-the-fly from preservation masters, essentially making what in OAIS terms one might call the DIP ephemeral, reliably reproducible, and even in essence unimportant. (We have cron jobs that purge derivatives after a few days of not being used.) That design is deliberately in accordance with the preservation philosophy here.

Our DSpace implementation is the exception due to the constraints of the application, but it's worth noting we've generally decided *against* approaches that we could have taken that would have involved duplication, such as a hidden AIP and visible DIP (when I asked this question, I was in "total DIP mode"), and I think that is again a reflection of the engrained philosophy here. We've instead aimed for an optimized approach, preserving and providing content in formats that we believe users will be able to deal with.

"To some degree." "Subjectively." "In essence unimportant." Even though this all sounds very reasonable, I'm not sure that my former colleague realizes that we're living in a dystopia, and that Big Brother is watching! You can't just say stuff like that! 2 + 2 = 5!

There's more that I could say about OAIS (e.g., that it assumes that information packages are static in a way that hardly ever reflects reality, and that it doesn't focus enough on engaging with content creators and end users), but that's a post for another day.

3. Society is hierarchical, and divisions between the upper, middle and lower class are definitive and unbending.

In the novel Brave New World, written in 1931 by Aldous Huxley, a class system is prenatally designated in terms of Alphas, Betas, Gammas, Deltas and Epsilons, with the lower classes having reduced brain-function and special conditioning to make them satisfied with their position in life. [5]

A last characteristic of dystopias is that they are hierarchical, and you can't do anything about it. And let's face it, our digital curation society is hierarchical. We all look to the same big names and institutions, and as someone who came from a small- to medium-sized institution, and as someone who now works at an institution without our own information technology infrastructure, I can tell you first hand that digital preservation, at least at first, can seem like a "rich person's game." For the "everyman" institution (to use a literary trope often found in dystopian fiction, with apologies for it not being inclusive) with some or no financial resources, or without human expertise, it can be hard to know where to start, or even make the case in the first place for something like digital preservation that by its very nature doesn't have any immediate benefits.

As an aside (my argument is going to fall apart!), I think this "class system" is more psychological than anything else. If you are that "everyman" institution, there's a ton that pretty much anyone can do to get started. If you're looking for inspiration, here it is:

- You've Got to Walk Before You Can Run: Ricky Erway’s report addresses some of the very basic challenges of digital preservation in the real world.

- Getting Started with Digital Preservation: Kevin Driedger and I talk about initial steps in the digital preservation "dance."

- 'Good Enough' Really Is Good Enough: Mike and I (in my old stomping grounds!), and our colleague Aaron Collie, make the case that OAIS-ish, or 'good enough,' is just that. You don't have to be big to do good things in digital preservation.

- National Digital Stewardship Alliance Levels of Preservation: I like this model because it acknowledges that you don't have to jump into the deep end with digital preservation. Instead, the model moves progressively from "the basic need to ensure bit preservation towards broader requirements for keeping track of digital content and being able to ensure that it can be made available over longer periods of time."

- Children of Men: Theo Faron, a former activist who was devastated when his child died during a flu pandemic, is the "archetypal everyman" who reluctantly becomes a savior, leading Kee to the Tomorrow and saving humanity! Oh wait...

Enter Archivematica, the Protagonist

It is within this dystopian backdrop that we meet Archivematica, our protagonist. Archivematica is a web- and standards-based, open-source application which allows institutions to preserve long-term access to trustworthy, authentic and reliable digital content. And according to their website, Archivematica has all of the makings of a hero who will lead the way in our conflict against the opposing dystopian force:

Not only is it in compliance with the OAIS Functional Model (there it is again!), it uses well-defined metadata schemes like METS, PREMIS, Dublin Core and the Library of Congress BagIt Specification. This makes it very interoperable, which is why we can use it in our Archivematica-ArchivesSpace-DSpace Workflow Integration project.

- It's built on microservices.

Microservices is a software architecture style, in which complex applications are composed of small, highly decoupled and independent processes. When you find a better tool to do a particular job, you can just replace one microservice with another rather than the whole software package. This type of design was highly influential in AutoPro.

- It is flexible and customizable.

Archivematica provides several decision points that give the user almost total control over processing configurations. Users may also preconfigure most of these options for seamless ingest to archival storage and access:

Processing Configuration

- It is compatible with hundreds of formats.

Archivematica maintains a Format Policy Registry (FPR). The FPR is a database which allows Archivematica users to define format policies for handling file formats, for example, the actions, tools and settings to apply to a file of a particular file format (e.g., conversion to a preservation format, conversion to an access format).

- It is integrated with third-party systems.

- It has an active community.

- It improves and extends the functionality of AutoPro.

This one relates only to us, but Archivematica (with two notable exceptions) is more scalable, handles errors better and is easier to maintain than our homegrown tool AutoPro, which we've been using for the last three to five years or so to process digital materials.

- It is constantly improving.

This is a big one. Artefactual Systems, Inc., in concert with Archivematica's users, is constantly improving the application. The fact that the entire community benefits whenever one person or institution contributes resources was a big motivation for our involvement. You can even monitor the development roadmap to see where they're headed!
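To make the microservices and Format Policy Registry ideas above a little more concrete, here is a minimal sketch in Python. It is not Archivematica's code or its actual FPR data: the policy table, the step functions and the record fields are all hypothetical names invented for illustration, and identifying a format by file extension is a deliberate simplification.

```python
from pathlib import Path
from typing import Callable, Dict, List, Tuple

# A "microservice" here is just a function that takes a record describing
# a file and returns an updated record. (Hypothetical, for illustration.)
Record = Dict[str, str]
Step = Callable[[Record], Record]

# Illustrative format policies keyed by (extension, purpose).
# These entries are assumptions, not real FPR rules.
POLICIES: Dict[Tuple[str, str], str] = {
    (".jpg", "preservation"): "tiff",
    (".doc", "preservation"): "pdfa",
    (".doc", "access"): "pdf",
}


def identify_by_extension(record: Record) -> Record:
    """Identification step: use the lower-cased file extension as the format."""
    return {**record, "format": Path(record["name"]).suffix.lower()}


def choose_preservation_target(record: Record) -> Record:
    """Policy step: look up the preservation target for the identified format."""
    target = POLICIES.get((record["format"], "preservation"), "keep-original")
    return {**record, "preservation_target": target}


def run_pipeline(steps: List[Step], record: Record) -> Record:
    """Run each step in order, passing the record from one to the next."""
    for step in steps:
        record = step(record)
    return record


result = run_pipeline(
    [identify_by_extension, choose_preservation_target],
    {"name": "Photo.JPG"},
)
print(result["preservation_target"])
```

Because each step only agrees on the shape of the record, a better identification function could be dropped in place of `identify_by_extension` without touching the policy step, which is the appeal of the design.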

Archivematica's Character Flaws

That's a lot about what makes Archivematica awesome. But it's not perfect. In literature, a character flaw is a "limitation, imperfection, problem, phobia, or deficiency present in a character who may be otherwise very functional." [6] Archivematica's character flaws may be categorized as minor, major and tragic.

Minor Flaws

Minor flaws serve to distinguish characters for the reader, but they don't usually affect the story in any way. Think Scar's scar from The Lion King, which serves to distinguish him (a bit) from the archetypal villain, or the fact that King Arthur can't count to three in Monty Python and the Holy Grail (the Holy Hand Grenade of Antioch still gets thrown!).

I can think of these:

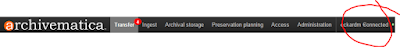

- The responsive design is nice (even though I can't think of a time I'd ever be arranging anything on my cell phone), but the interface has something akin to Scar's scar.

I don't know why the overlap between the button next to my username and "Connected" bothers me so much, but it does.

- Also, who names their development servers after mushrooms?

Major Flaws

Major flaws are much more noticeable than minor flaws, and they are almost invariably important to the story's development. Think Anakin Skywalker's anger and fear of losing his wife Padme, which eventually consume him, leading to his transformation into Darth Vader, or Victor Frankenstein's excessive curiosity, leading to the creation of the monster that destroys his life.

Indeed, Archivematica has a few flaws that are important to this story's development.

Storage

Archivematica indexes AIPs, and can output them to a storage system, but, as the recent Preserving (Digital) Objects with Restricted Resources (POWRR) White Paper suggests, Archivematica is not a storage solution:

Notice all the gray above Storage?

If you're interested in long-term preservation, your digital storage system should be safe and redundant, perhaps using different storage mediums, with at least one copy in a separate geographic location. Since Archivematica does not store digital material, the onus is on the institution to get this right.

To be fair, from the beginning, Archivematica has not focused on storage, instead focusing on producing--and later, indexing--a very robust AIP and integrating with other storage tools such as Arkivum, DuraCloud, LOCKSS and DuraSpace (including the recent launch of ArchivesDirect, a new Archivematica/DuraSpace hosted service), and besides those just about any type of storage you can think of. I still feel I have to classify this as a major flaw, though, since quality storage is at the core of a good digital preservation system.

A second major character flaw for Archivematica is that it is not a means for the active, ongoing management aspect of digital preservation, which is really what ensures that digital materials will be accessible over time as technologies change. Again, the POWRR White Paper:

Notice all the gray above Maintenance?

Archivematica doesn't currently have functionality to perform preservation migrations on AIPs that have already been processed. Even though I'd argue that this isn't as central to long-term preservation as quality storage is, it will eventually become an issue for institutions trying to maintain accessibility to digital objects over time.

Archivematica also does not have out-of-the-box functionality to do integrity checks on stored digital objects. Even though I have to admit that after recording an initial message digest, I haven't actually heard of a lot of "everyman" institutions performing periodic audits or doing them in response to a particular event, this seems like a deficiency in the area of file fixity and data integrity.
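For readers who haven't seen one, a periodic fixity audit of the kind described above can be sketched in a few lines of Python. This is only an illustration using the standard library, not something Archivematica provides; the function names and the idea of keeping recorded digests in a dictionary keyed by relative path are assumptions made for the example.

```python
import hashlib
from pathlib import Path
from typing import Dict


def sha256_digest(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Compute a SHA-256 message digest, reading the file in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def audit(recorded: Dict[str, str], root: Path) -> Dict[str, str]:
    """Compare stored files against previously recorded digests.

    `recorded` maps a path relative to `root` to the digest captured at
    ingest (a hypothetical structure). Each path is reported as "ok",
    "changed" or "missing".
    """
    results = {}
    for relative_path, expected in recorded.items():
        candidate = root / relative_path
        if not candidate.is_file():
            results[relative_path] = "missing"
        elif sha256_digest(candidate) == expected:
            results[relative_path] = "ok"
        else:
            results[relative_path] = "changed"
    return results
```

Run on a schedule (or after an event such as a storage migration), anything other than "ok" is the cue to restore from another copy.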

Documentation

I should have included this in last week's post about ArchivesSpace as well. Documentation issues are a "known issue" with many open source projects, and ArchivesSpace and Archivematica are no different. There have been a number of times where I have looked for some information on the Archivematica wiki (for example, on the Storage Service API, Version 1.4, etc.) and have found the documentation to be missing or incomplete. Lack of documentation can be a real barrier to implementation.

On the upside, documentation is something we can all contribute to (even if we aren't coders)! I for one am going to be looking into this, starting with this conversation.

An update! That was fast!

And this one:

Initial QC on Significant Characteristics

Some digital preservation systems and workflows perform checks on significant characteristics of the content of digital objects before and after normalization or migration. For example, if you're converting a Microsoft Word document to PDF/A, a system or workflow might check the word count on either end of that transformation. Currently, the only quality control that Archivematica does is to check that a new file exists, and that its size isn't zero (i.e., that it has data).

However, it is possible to add quality control functionality in Archivematica; it just isn't well documented (see the above). In the FPR, you can define verification commands above and beyond the basic default commands. There's some more homework for me.
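To show the difference between the two levels of checking discussed above, here is a small Python sketch. It is not an FPR verification command and does not reflect how Archivematica implements its check; the function names are hypothetical, and it assumes the text of the original and converted documents has already been extracted by some other tool.

```python
import re
from pathlib import Path


def basic_check(converted: Path) -> bool:
    """The baseline check: the new file exists and its size isn't zero."""
    return converted.is_file() and converted.stat().st_size > 0


def word_count(text: str) -> int:
    """Count whitespace-separated words in already-extracted text."""
    return len(re.findall(r"\S+", text))


def word_counts_match(original_text: str, converted_text: str,
                      tolerance: int = 0) -> bool:
    """A significant-characteristics check: compare word counts on either
    end of a conversion, allowing an optional difference of `tolerance`."""
    difference = abs(word_count(original_text) - word_count(converted_text))
    return difference <= tolerance
```

The `tolerance` parameter is there because text extraction from different formats rarely agrees exactly, so a workflow would likely need to decide how much drift is acceptable.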

Reporting

While Archivematica produces a lot of technical metadata about the digital objects in your collections, there isn't really a way to manage this information or share it with administrators via reports. Even basic facts, such as total extent or size of collection and distribution of file types or ages are not available in a user friendly way. This is true about collections as a whole, but also for individual Submission Information Packages (SIPs) or AIPs.
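As a rough idea of the "basic facts" mentioned above, the following Python sketch walks a directory and reports file count, total size and distribution of file types. It is an assumption-laden illustration, not an Archivematica feature: it works on a plain directory tree rather than on Archivematica's METS files, and it groups files by extension rather than by identified format.

```python
from collections import Counter
from pathlib import Path
from typing import Dict, Tuple


def summarize(root: Path) -> Tuple[int, int, Dict[str, int]]:
    """Return (file count, total bytes, number of files per extension)."""
    file_count = 0
    total_bytes = 0
    by_extension: Counter = Counter()
    for path in root.rglob("*"):
        if path.is_file():
            file_count += 1
            total_bytes += path.stat().st_size
            # Files without an extension are grouped under "(none)".
            by_extension[path.suffix.lower() or "(none)"] += 1
    return file_count, total_bytes, dict(by_extension)
```

Pointed at an unpacked SIP or AIP directory, the result could be dropped into a spreadsheet for administrators.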

Two recent developments are worth mentioning. First, there's a tool Artefactual Systems developed for the Museum of Modern Art (MoMA): Binder (which just came out this week!). It solves this problem, for example, by allowing you to look at the technical metadata of digital objects in a graphical user interface, run and manage fixity checks of preserved AIPs (receiving alerts if a fixity check fails), and generate and save statistical reports on digital holdings for acquisitions planning, preservation, risk assessment and budget management. Actually, it does even more than that, so be sure to check out the video. We can't wait to dig into Binder.

The second development has to do with our project! Part of the new Appraisal and Arrangement tab will be new reporting functionality to assist with appraisal. This will (we hope!) include technical information about the files themselves--some code may be borrowed from Binder--as well as information about Personally Identifiable Information (PII):

Transfer Backlog Pane

Tragic Flaws

Tragic flaws are a specific sort of flaw in an otherwise noble or exceptional character that bring about his or her own downfall and, often, eventual death. Think Macbeth's hubris or human sin in Christian theology.

While all of this is a little dramatic for our conversation here, there is one very important thing that Archivematica doesn't do:

Archivematica does NOT ensure that we never lose anything digital ever again.

Besides the fact that Archivematica suffers from all of the same "essential" difficulties in software engineering as ArchivesSpace (namely, complexity, conformity, changeability and invisibility--and for pretty much all of the same reasons, I might add), it is also not some kind of comprehensive "silver bullet" that will protect our digital material for all time. It's just not, which leads me to...

The Reveal! Why All of This is OK with Us

Actually, there is no such thing as a "comprehensive" digital preservation solution, so we can't really hold this against Artefactual Systems, Inc. Anne R. Kenney and Nancy McGovern, in "The Five Organizational Stages of Digital Preservation," say it best:

Organizations cannot acquire an out-of-the-box comprehensive digital preservation program— one that is suited to the organizational context in which the program is located, to the materials that are to be preserved, and to the existing technological infrastructure. Librarians and archivists must understand their own institutional requirements and capabilities before they can begin to identify which combination of policies, strategies, and tactics are likely to be most effective in meeting their needs.

Just like ArchivesSpace, Archivematica has a lot going for it. We are especially fond of its microservices design, its incremental agile development methodology, and its friendly and knowledgeable designers.

We love the fact that Archivematica is open source and community-driven, and we try to participate as fully as we can in that community, and intend to do so even more in the future. We do that financially, obviously, but also by participating on the Google Group, contributing user stories for our project and ensuring that the code developed for it will be made available to the public. You should too!

Conclusion: The Purpose of Dystopian Fiction

To have an effect on the reader, dystopian fiction has to have one other trait: familiarity. The dystopian society must call to mind the reader's own experience. According to Jeff Mallory, "if the reader can identify with the patterns or trends that would lead to the dystopia, it becomes a more involving and effective experience. Authors use a dystopia effectively to highlight their own concerns about society trends." Good dystopian fiction is a call to action in the present.

By focusing on automating the ingest process and producing a repository agnostic, normalized, and well-described (those METS files are huge!) AIP, and doing so in such a way that institutions of all sizes can do a lot or even a little with digital preservation, Archivematica addresses those concerns really well. That, coupled with the fact that staff there are also active in other community initiatives, such as the Hydra Metadata Working Group and IMLS Focus, definitely makes them not only protagonists, but heroes in this story.

In the end, Archivematica is our call to action to be heroes in this story as well!

[1] Is it Dystopia? A flowchart for de-coding the genre by Erin Bowman is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License. Based on a work at www.embowman.com. Feel free to share it for non-commercial uses.

[2] Dystopia (this version)

[3] "The Matrix Poster" by Source. Licensed under Fair use via Wikipedia - http://en.wikipedia.org/wiki/File:The_Matrix_Poster.jpg#/media/File:The_Matrix_Poster.jpg

[4] "1984first" by George Orwell; published by Secker and Warburg (London) - Brown University Library. Licensed under Public Domain via Wikipedia - http://en.wikipedia.org/wiki/File:1984first.jpg#/media/File:1984first.jpg

[5] "BraveNewWorld FirstEdition" by Source. Licensed under Fair use via Wikipedia - http://en.wikipedia.org/wiki/File:BraveNewWorld_FirstEdition.jpg#/media/File:BraveNewWorld_FirstEdition.jpg

[6] Character flaw (this version)